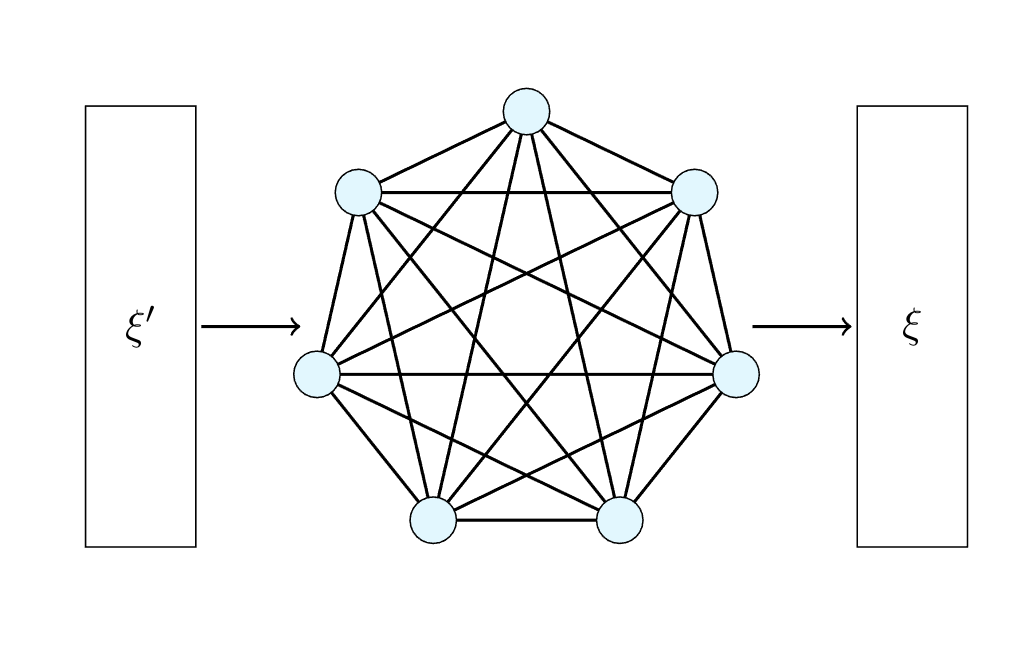

Associative memories are a class of neural networks that learn to link a probe state with a target state. These models are useful for studying a wide variety of behaviours, such as human cognition, magnetic materials, and chaotic attractor spaces. We are interested in the foundational properties of these systems, and study the behaviour of several associative memories, both in theory and in practice. We have found a rigorous relationship between Hebbian learning and prototype formation; this relationship has links to psychology and category-prototype theory, and shows that some spurious states of the Hopfield network are useful for recall. We have also studied the Dense Associative Memory, an abstraction of the Hopfield network, and made it significantly more stable.
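To make prototype formation concrete, here is a minimal sketch (not the group's code), assuming ±1 neurons, the standard Hebbian outer-product rule and asynchronous sign updates; all names are hypothetical. The network is trained only on noisy copies of a prototype, yet a noisy probe settles onto the prototype itself, a state that was never explicitly stored.

```python
import random

def hebbian_weights(patterns):
    """Hebbian rule: W_ij = (1/P) * sum_mu x_i^mu x_j^mu, with no self-connections."""
    n, p = len(patterns[0]), len(patterns)
    w = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += x[i] * x[j] / p
    return w

def recall(w, probe, max_sweeps=20):
    """Asynchronous sign updates until no neuron changes (or max_sweeps is reached)."""
    s = list(probe)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            h = sum(w_ij * s_j for w_ij, s_j in zip(w[i], s))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i], changed = new, True
        if not changed:
            break
    return s

def noisy_copy(x, flip_prob, rng):
    """Flip each +/-1 unit independently with probability flip_prob."""
    return [-v if rng.random() < flip_prob else v for v in x]

def overlap(a, b):
    """1.0 for identical patterns, about 0 for uncorrelated ones."""
    return sum(u * v for u, v in zip(a, b)) / len(a)

rng = random.Random(0)
N = 100
prototype = [rng.choice([-1, 1]) for _ in range(N)]
# Only noisy examples are stored; the prototype itself is never given to the network.
examples = [noisy_copy(prototype, 0.1, rng) for _ in range(30)]
W = hebbian_weights(examples)

probe = noisy_copy(prototype, 0.2, rng)
result = recall(W, probe)
print(f"probe overlap with prototype:    {overlap(probe, prototype):.2f}")
print(f"recalled overlap with prototype: {overlap(result, prototype):.2f}")
```

With many examples, the summed Hebbian terms average out the per-example noise, so the weight matrix is dominated by the shared prototype and that prototype becomes an attractor.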

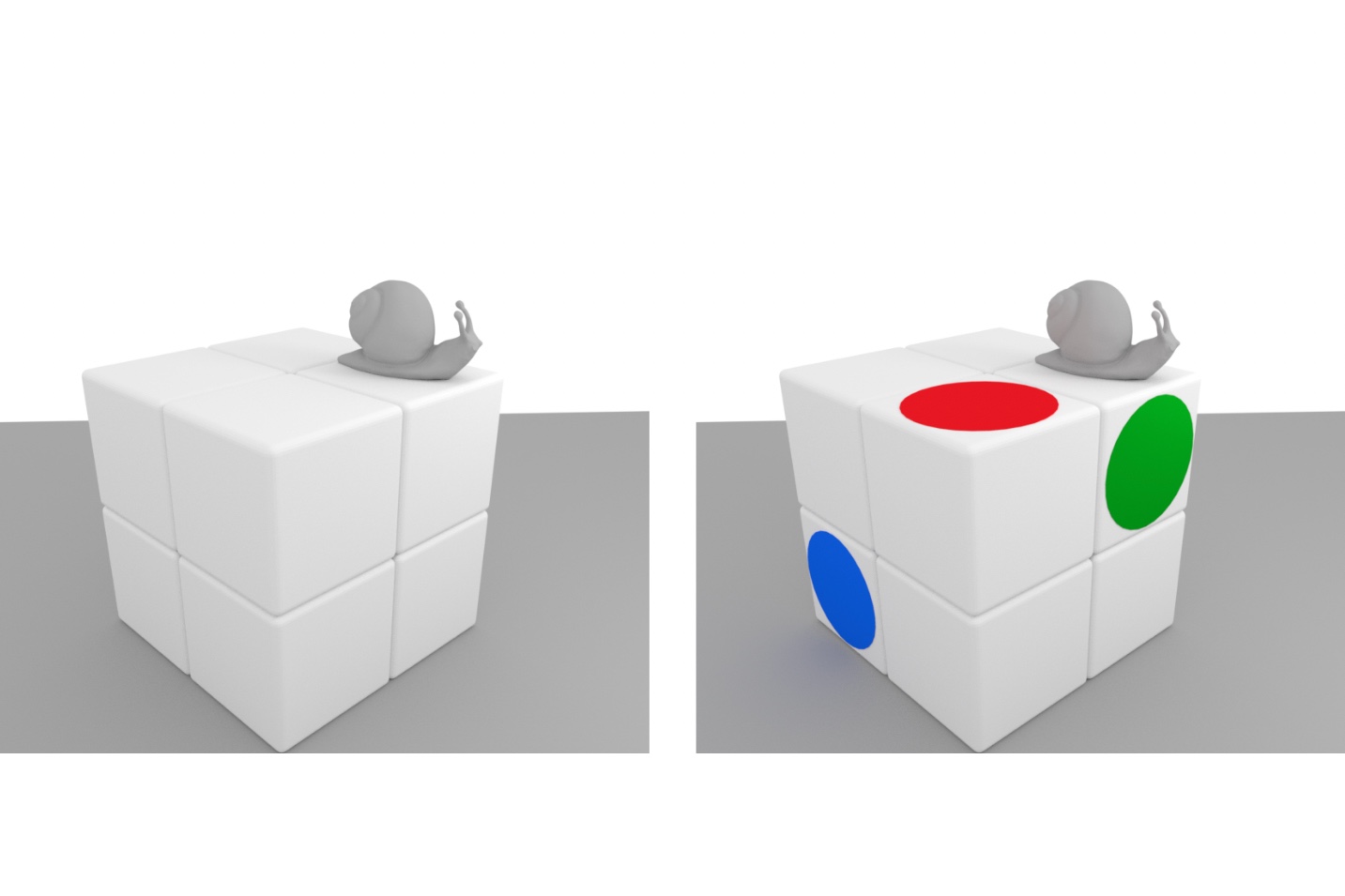

How does the brain represent the geometry of 3D objects? Most researchers considering this question focus on vision. However, infants first learn about 3D objects through the haptic system, that is, by tactile exploration of objects. In this project, we develop a neural network model that learns aspects of the structure of a 3D cuboid, using input from the motor system that controls a simulated hand navigating over its surfaces. It does this with a simple unsupervised network that learns to represent frequently experienced sequences of motor movements. The network learns an approximate mapping from agent-centred (i.e., egocentric) movements to object-centred (i.e., allocentric) locations on the cuboid's surfaces. We also show how this mapping can be improved by adding tactile landmarks, by the presence of asymmetries in the cuboid, and by supplementing the input with the agent's configurations. We then investigate how the learned geometry of the cuboid can support a reinforcement scheme that enables the agent to learn simple paths to goal locations on the cuboid.
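The reinforcement step can be illustrated with standard tabular Q-learning, under strong simplifying assumptions: the allocentric locations are taken as given rather than learned, and they are coarsened to the cuboid's 8 corners, encoded as 3-bit codes so that moving along an edge flips one bit. This is a swapped-in toy with hypothetical names and parameters, not the project's scheme.

```python
import random

N_CORNERS = 8
ACTIONS = (0, 1, 2)  # move along the x, y or z edge of the cuboid

def step(corner, action):
    """Corners are 3-bit codes (x, y, z); moving along an edge flips one bit."""
    return corner ^ (1 << action)

def q_learning(goal, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with reward 1 for reaching the goal corner, 0 otherwise."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_CORNERS)]
    for _ in range(episodes):
        s = rng.randrange(N_CORNERS)
        while s != goal:
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                a = max(ACTIONS, key=lambda k: q[s][k])  # exploit
            s_next = step(s, a)
            if s_next == goal:
                target = 1.0  # terminal transition: no bootstrapping
            else:
                target = gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q

def greedy_path(q, start, goal, max_len=20):
    """Follow the highest-valued action from start until the goal (or max_len moves)."""
    path = [start]
    while path[-1] != goal and len(path) <= max_len:
        s = path[-1]
        path.append(step(s, max(ACTIONS, key=lambda k: q[s][k])))
    return path

q = q_learning(goal=7)
print(greedy_path(q, start=0, goal=7))
```

Because the reward is discounted by `gamma` at every move, shorter routes earn higher values, so the greedy path from corner 0 to the opposite corner 7 crosses three edges.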

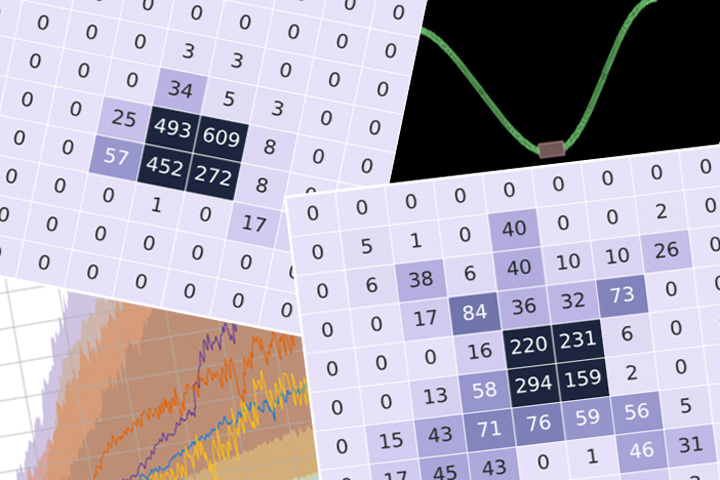

Surprise has been cast as a cognitive-emotional phenomenon that affects many aspects of behaviour, from creativity to learning to decision-making. Why are some events more surprising than others? Why do different people find the same event surprising to different degrees? In this project, we seek a workable definition of "surprise" and apply it in reinforcement learning. A surprise-driven agent can learn to explore without any reward signal from the environment. To do this, the agent builds a model of the environment, and "surprise" is the inconsistency between the model's prediction and the observed outcome. The agent then learns in a reinforcement learning environment by maximizing this "surprise".
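A minimal sketch of the idea, assuming a hypothetical 10-state chain world, a count-based forward model with add-one smoothing, and surprise measured as the negative log-probability the model assigns to the observed next state. To keep it short, the agent greedily picks the action with the highest expected surprise (the entropy of its prediction) instead of training a full reinforcement-learning policy with surprise as the reward; none of these names come from the project.

```python
import math
import random
from collections import defaultdict

class CountModel:
    """Tabular forward model P(s' | s, a) estimated from counts with add-one smoothing."""

    def __init__(self, n_states):
        self.n_states = n_states
        self.counts = defaultdict(lambda: [0] * n_states)

    def prob(self, s, a, s_next):
        c = self.counts[(s, a)]
        return (c[s_next] + 1) / (sum(c) + self.n_states)

    def update(self, s, a, s_next):
        self.counts[(s, a)][s_next] += 1

    def surprise(self, s, a, s_next):
        """Surprise of an observed outcome: its negative log-probability under the model."""
        return -math.log(self.prob(s, a, s_next))

    def expected_surprise(self, s, a):
        """Expected surprise of taking a in s, i.e. the entropy of the predicted next state."""
        return sum(self.prob(s, a, t) * self.surprise(s, a, t) for t in range(self.n_states))

def chain_step(s, a, n_states):
    """Hypothetical deterministic chain: action 0 moves left, 1 moves right, walls at both ends."""
    return max(0, s - 1) if a == 0 else min(n_states - 1, s + 1)

def explore(n_states=10, steps=500, seed=0):
    """Run a reward-free agent that always seeks the most surprising action."""
    rng = random.Random(seed)
    model = CountModel(n_states)
    s, visited, surprises = 0, {0}, []
    for _ in range(steps):
        scores = [model.expected_surprise(s, a) for a in (0, 1)]
        best = max(scores)
        a = rng.choice([a for a in (0, 1) if scores[a] == best])  # random tie-break
        s_next = chain_step(s, a, n_states)
        surprises.append(model.surprise(s, a, s_next))  # measured before the model learns
        model.update(s, a, s_next)
        visited.add(s_next)
        s = s_next
    return visited, surprises

visited, surprises = explore()
print(sorted(visited), round(surprises[0], 3), round(surprises[-1], 3))
```

Familiar transitions become less surprising as the model learns them, so the agent is pushed toward under-explored state-action pairs and ends up visiting the whole chain without any external reward.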

The overarching aim of this project is to develop a next-generation data infrastructure linking farm management, genetic improvement and traceability. The objective here is to demonstrate the feasibility of using image analysis, particularly the latest advances in deep learning, for parentage assignment in livestock, using sheep as an exemplar. For example, given a facial image of a lamb and multiple pictures of possible fathers (rams) and mothers (ewes), the goal is to correctly identify the lamb's parents. Rather than requiring manual identification of phenotypes for each new sheep, we aim to create and train a convolutional neural network (CNN) that detects features for matching parents with their offspring directly from images of animal faces.
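The matching step can be sketched as follows, assuming a trained CNN (not shown) has already mapped each face image to a feature vector. The vectors and animal IDs below are made up, and ranking candidates independently by cosine similarity is an illustrative choice, not necessarily the project's method.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def assign_parents(lamb, rams, ewes):
    """Pick the sire and dam whose face embeddings are most similar to the lamb's.

    lamb is one embedding; rams and ewes map a hypothetical animal ID to an embedding.
    """
    sire = max(rams, key=lambda rid: cosine(lamb, rams[rid]))
    dam = max(ewes, key=lambda eid: cosine(lamb, ewes[eid]))
    return sire, dam

# Made-up 3-D embeddings; a real CNN would produce much longer vectors.
lamb = [0.9, 0.1, 0.4]
rams = {"R1": [0.8, 0.2, 0.5], "R2": [-0.3, 0.9, 0.1]}
ewes = {"E1": [0.1, -0.7, 0.7], "E2": [1.0, 0.0, 0.3]}
print(assign_parents(lamb, rams, ewes))  # → ('R1', 'E2')
```

Scoring sires and dams separately keeps the sketch simple; a model could instead score each (ram, ewe) pair jointly against the lamb.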

People are more interesting than machines. How do our brains and minds work? AI gives us computational tools to address these questions, from modelling brain function at the cellular level to exploring aspects of cognition such as memory and language. Work on this project has focused on learning and forgetting in artificial neural networks and real brains, and on the nature of real and false memories in dynamical neural network systems. After a long break, it is time to get this project moving again.

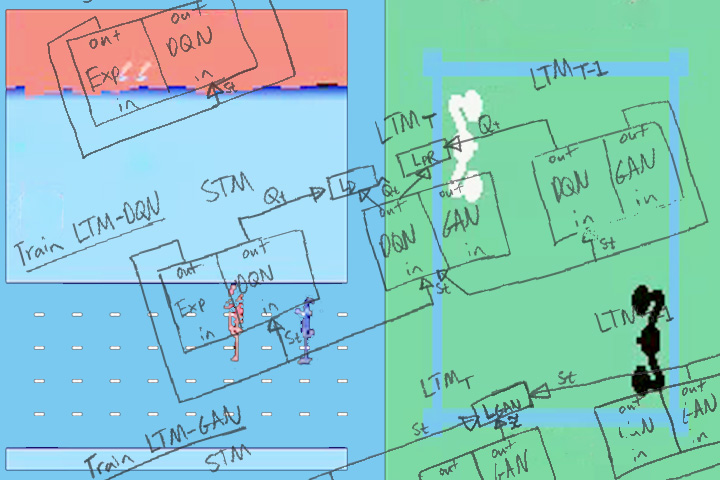

Neural networks can achieve extraordinary results on a wide variety of tasks. However, when they learn a number of tasks sequentially, they tend to learn each new task while destructively forgetting previous ones. This is known as the Catastrophic Forgetting problem, and it is an essential problem to solve if we want artificial agents that can learn continuously. This project aims to solve Catastrophic Forgetting by having the network rehearse previous tasks while learning new ones. Previous tasks are rehearsed from examples produced by a single generative model that is also trained sequentially on all previously learnt tasks.
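A toy version of generative replay, assuming 1-D data: a per-class Gaussian stands in for the generative model and a nearest-class-mean classifier stands in for the solver network. Forgetting is simulated by refitting both models only on the current training set; with replay, that set also contains samples drawn from the previous generator, so the generator itself is trained sequentially on all earlier tasks. Names and data are hypothetical.

```python
import random
import statistics

def fit_gaussians(data):
    """Toy generator: per-class mean and std of 1-D samples; data is a list of (x, label)."""
    by_label = {}
    for x, y in data:
        by_label.setdefault(y, []).append(x)
    return {y: (statistics.mean(xs), statistics.pstdev(xs)) for y, xs in by_label.items()}

def sample(generator, n_per_class, rng):
    """Draw labelled pseudo-examples of every class the generator knows about."""
    return [(rng.gauss(mu, sd), y) for y, (mu, sd) in generator.items() for _ in range(n_per_class)]

def fit_classifier(data):
    """Toy solver: nearest-class-mean classifier fitted only on the data it is given."""
    return {y: mu for y, (mu, _) in fit_gaussians(data).items()}

def predict(classifier, x):
    return min(classifier, key=lambda y: abs(x - classifier[y]))

def accuracy(classifier, test):
    return sum(predict(classifier, x) == y for x, y in test) / len(test)

def train_sequentially(tasks, replay, rng, n_replay=50):
    generator, classifier = {}, {}
    for task_data in tasks:
        data = list(task_data)
        if replay and generator:
            data += sample(generator, n_replay, rng)  # rehearse earlier tasks from generated examples
        # Both models are refit from scratch on the current training set, which is what
        # makes the no-replay run forget earlier tasks.
        classifier = fit_classifier(data)
        generator = fit_gaussians(data)
    return classifier

rng = random.Random(1)

def make_task(classes):
    """100 noisy samples for each (label, mean) pair."""
    return [(rng.gauss(mu, 0.5), y) for y, mu in classes for _ in range(100)]

tasks = [make_task([(0, 0.0), (1, 3.0)]), make_task([(2, 6.0), (3, 9.0)])]
test = [(0.0, 0), (3.0, 1), (6.0, 2), (9.0, 3)]

for replay in (False, True):
    acc = accuracy(train_sequentially(tasks, replay, rng), test)
    print(f"replay={replay}: accuracy on all four classes = {acc:.2f}")
```

Without replay, the classifier only knows the two most recent classes and scores 0.5 on the four test points; with replay, it keeps all four.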