Surprise-driven reinforcement learning

Abstract

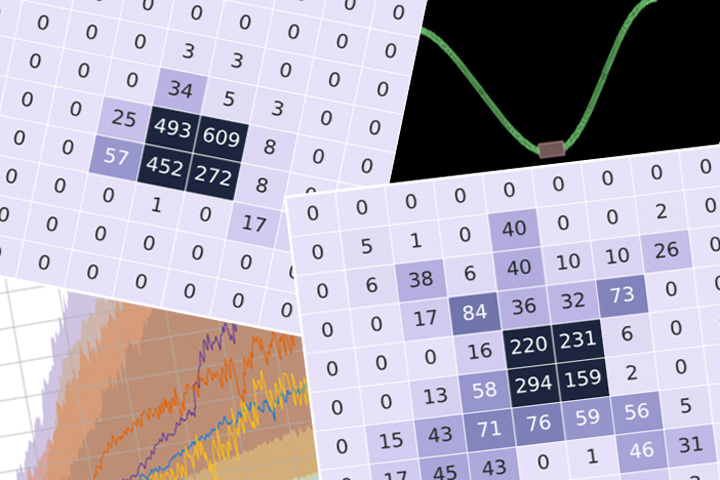

Surprise has been cast as a cognitive-emotional phenomenon that affects many processes, from creativity to learning to decision-making. Why are some events more surprising than others? Why do different people experience different degrees of surprise at the same event? In this project, we seek a workable definition of "surprise" and apply it to reinforcement learning. A surprise-driven agent can learn to explore without access to any reward signal from the environment. It does so by building a model of the environment: "surprise" is the inconsistency between the model's prediction and the observed outcome, and the agent learns by maximizing this surprise.
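As a rough illustration of the idea described in the abstract, the sketch below treats surprise as the squared error between a learned model's prediction and the observed next state, and uses that value as an intrinsic reward. It is a minimal sketch under stated assumptions, not the definition used in the publications listed on this page: the linear `ForwardModel`, the squared-error measure, the learning rate, and the toy transition are all hypothetical choices made for illustration.

```python
import numpy as np


class ForwardModel:
    """Hypothetical linear model that predicts the next state from (state, action)."""

    def __init__(self, state_dim, action_dim, lr=0.1):
        self.W = np.zeros((state_dim, state_dim + action_dim))
        self.lr = lr

    def predict(self, state, action):
        return self.W @ np.concatenate([state, action])

    def update(self, state, action, next_state):
        # One gradient step on the squared prediction error.
        x = np.concatenate([state, action])
        error = next_state - self.W @ x
        self.W += self.lr * np.outer(error, x)


def surprise(model, state, action, next_state):
    """Squared prediction error, used here as an assumed intrinsic reward."""
    diff = next_state - model.predict(state, action)
    return float(diff @ diff)


# Toy transition (made up for this example): the same outcome is observed repeatedly.
model = ForwardModel(state_dim=2, action_dim=1)
s, a, s_next = np.array([1.0, 0.0]), np.array([1.0]), np.array([0.5, 0.5])

first = surprise(model, s, a, s_next)   # untrained model: high surprise
for _ in range(20):
    model.update(s, a, s_next)
later = surprise(model, s, a, s_next)   # familiar transition: low surprise
```

Because the surprise of a transition shrinks as the model learns to predict it, an agent that maximizes this quantity is pushed toward transitions it cannot yet predict, which is one way to obtain exploration without an external reward.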

Personnel

- Haitao Xu (PI)

- Brendan McCane (Primary Supervisor)

- Lech Szymanski (Supervisor)

Tags

Surprise-Driven Learning, Reinforcement Learning

Related Publications

VASE: Variational Assorted Surprise Exploration for Reinforcement Learning. IEEE Transactions on Neural Networks and Learning Systems, 34(3):1243-1252, 2023.

Intrinsic reward driven exploration for deep reinforcement learning. PhD thesis, University of Otago, 2021.

MIME: Mutual Information Minimisation Exploration. arXiv preprint arXiv:2001.05636, 2020.
