Solving Catastrophic Forgetting in Deep Neural Networks

Abstract

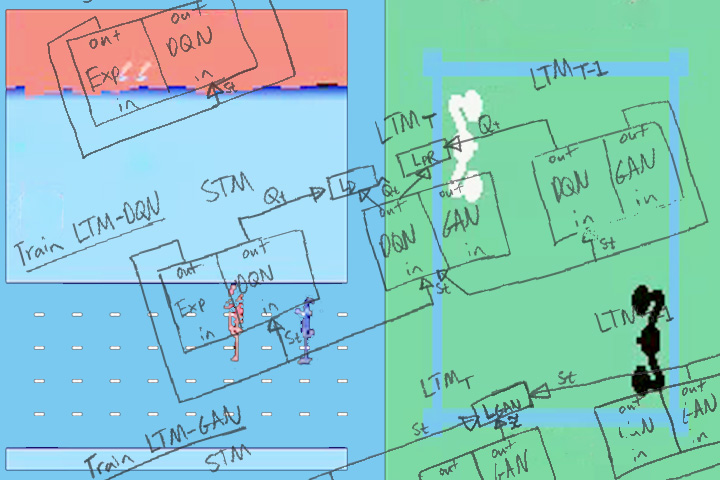

Neural networks can achieve extraordinary results on a wide variety of tasks. However, when they attempt to sequentially learn a number of tasks, they tend to learn the new task while destructively forgetting previous tasks. This is known as the Catastrophic Forgetting problem and it is an essential problem to solve if we want to achieve artificial agents that can continuously learn. This project aims to solve Catastrophic Forgetting by having the network rehearse previous tasks while learning new ones. Previous tasks are rehearsed from examples produced by a single generative model that is also sequentially trained on all previously learnt tasks.
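The rehearsal idea can be illustrated with a minimal sketch. It is not the project's implementation: the function `build_rehearsal_batch`, the toy generator, and the toy "old model" are hypothetical stand-ins chosen for illustration, and the sketch assumes that generated inputs are labelled with the outputs of the network as it was before the new task was introduced.

```python
import random


def build_rehearsal_batch(new_examples, generator, old_model, n_pseudo, rng=None):
    """Mix real new-task examples with generated examples of earlier tasks.

    new_examples: list of (input, target) pairs for the new task.
    generator:    callable taking an rng and returning one input; a stand-in
                  for the generative model trained on earlier tasks.
    old_model:    callable mapping an input to a target; a stand-in for the
                  network as it was before learning the new task.
    """
    if rng is None:
        rng = random.Random()
    pseudo = []
    for _ in range(n_pseudo):
        x = generator(rng)                # sample an input resembling earlier tasks
        pseudo.append((x, old_model(x)))  # label it with the old network's output
    batch = list(new_examples) + pseudo
    rng.shuffle(batch)                    # interleave new and rehearsed examples
    return batch


# Toy stand-ins (assumptions for illustration only).
def toy_generator(rng):
    return rng.uniform(-1.0, 0.0)         # earlier tasks "live" on negative inputs


def toy_old_model(x):
    return 2.0 * x                        # behaviour the network should retain


new_task = [(0.5, 1.5), (0.8, 1.8)]       # new task uses positive inputs
batch = build_rehearsal_batch(
    new_task, toy_generator, toy_old_model, n_pseudo=3, rng=random.Random(0)
)
```

Training on `batch` rather than on `new_task` alone exposes the network to its own earlier behaviour while it learns the new task. Under the approach described above, the generative model would be updated in a similar way, so that it can keep producing examples of every task learnt so far.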

Personnel

- Craig Atkinson (PI)

- Brendan McCane (Primary Supervisor)

- Lech Szymanski (Supervisor)

- Anthony Robins (Supervisor)

Tags

Catastrophic Forgetting, Deep Learning, Reinforcement Learning, Convolutional Neural Networks

Related Publications

Pseudo-rehearsal: Achieving deep reinforcement learning without catastrophic forgetting. Neurocomputing, 428:291-307, 2021.

Achieving continual learning in deep neural networks through pseudo-rehearsal. PhD thesis, University of Otago, 2020.

GRIm-RePR: Prioritising Generating Important Features for Pseudo-Rehearsal. arXiv preprint arXiv:1911.11988, 2019.

Pseudo-recursal: Solving the catastrophic forgetting problem in deep neural networks. arXiv preprint arXiv:1802.03875, 2018.